Creative Testing in the Age of Algorithms (2026)

Creative testing is a topic that most advertisers think they understand. Create multiple ads and run them in a campaign – the winner will have the highest CTR or the lowest CPA.

That logic may work some of the time, but it misses a key point: not all ads are meant to do the same thing.

Delivery is influenced by early engagement signals, reinforcement learning loops, and optimization bias. In fact, ad spend is often disproportionately tied to hook rate, not final conversions.

Creative testing today is no longer a blanket A/B exercise. It’s part of a broader creative and messaging strategy – one that separates experimentation from optimization.

This article outlines a modern framework for testing creative in 2026 and explains how to structure campaigns so performance reflects message quality, not funnel proximity.

TL;DR

Most creative tests fail because algorithms don’t split traffic evenly and attribution windows distort results.

When different creative functions compete in the same campaign, performance often reflects funnel proximity, not message quality.

In 2026, creative testing requires two environments:

• Laboratory testing: isolate one creative function to validate messaging.

• System deployment: consolidate top performers to maximize algorithmic efficiency.

The laboratory creates clarity. The system creates scale.

Why Creative Testing Broke

During the 2010s, hyper-segmentation of campaigns was a viable strategy. It offered marketers more control over audiences, messages, and results. Read why this hyper-fixation on control felt safer.

Then came updates like Performance Max and Meta Andromeda. The paradigm shifted toward consolidation and exposed three major flaws in creative testing as we knew it.

Algorithms Don’t Split Traffic Evenly

Ad platforms like Google and Meta optimize ads based on engagement. That means if an ad receives slightly better early engagement, it receives more impressions and often suffocates other ads in the ad set.

The test becomes self-fulfilling rather than purely objective.

Google has even noted that campaigns using automated bidding will optimize ad delivery based on performance, so the ‘rotate evenly’ option doesn’t apply.
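To make the self-fulfilling effect concrete, here is a toy simulation. It assumes a hypothetical greedy allocator that sends every new batch of impressions to whichever ad has the best observed CTR so far; real platforms use far more sophisticated and undisclosed models. Both ads share the same true CTR, and ad A simply starts with one extra click.

```python
"""Toy model: a greedy allocator turns a tiny early lead into most of the traffic.

Assumption: this allocator is hypothetical and much simpler than any real
platform's delivery system. Clicks are added at their expected value
(impressions * true CTR), so the result is deterministic.
"""
from dataclasses import dataclass


@dataclass
class Ad:
    name: str
    true_ctr: float     # the ad's real click-through rate
    impressions: float
    clicks: float

    @property
    def observed_ctr(self) -> float:
        return self.clicks / self.impressions


def greedy_allocate(ads: list[Ad], batches: int, batch_size: int) -> dict[str, float]:
    """Give each batch to the ad with the best observed CTR; return impression shares."""
    for _ in range(batches):
        leader = max(ads, key=lambda ad: ad.observed_ctr)
        leader.impressions += batch_size
        leader.clicks += batch_size * leader.true_ctr  # expected clicks, no noise
    total = sum(ad.impressions for ad in ads)
    return {ad.name: ad.impressions / total for ad in ads}


if __name__ == "__main__":
    # Same true CTR (5%); ad A just got one extra click in its first 100 impressions.
    ads = [
        Ad("A", true_ctr=0.05, impressions=100, clicks=6),
        Ad("B", true_ctr=0.05, impressions=100, clicks=5),
    ]
    for name, share in greedy_allocate(ads, batches=20, batch_size=1_000).items():
        print(f"{name}: {share:.1%} of impressions")
```

Ad A finishes with roughly 99.5% of impressions even though neither ad is actually better. The early lead never gets a chance to be disproven because ad B stops receiving traffic.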

Exploration vs Exploitation

In simple ad systems, testing new creative is easy. Most angles are still untested, so a new ad that outperforms the existing variants picks up steam quickly.

In more complex systems, however, the algorithm is already pushing known winners. A new topic has to outperform creative with a long performance history, which is much harder.

In these environments, creative testing requires a separate campaign or a much larger change in budget. Read how decision-making changes as you scale.
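A back-of-envelope sketch shows why a new ad struggles inside a mature campaign. It assumes a hypothetical allocator that reserves a fixed exploration slice of daily impressions and splits it evenly among untested ads; platforms don't publish how they allocate exploration traffic, and every number here is illustrative.

```python
"""Back-of-envelope: how long a new ad needs to gather enough impressions.

Assumption: a hypothetical mature campaign reserves a fixed exploration
percentage of daily impressions and splits it evenly across untested ads.
"""


def impressions_per_day_in_system(daily_impressions: int, exploration_pct: int,
                                  new_ads: int) -> int:
    """Impressions one new ad receives per day from the exploration slice."""
    return daily_impressions * exploration_pct // (100 * new_ads)


def days_to_reach(target_impressions: int, impressions_per_day: int) -> int:
    """Whole days needed to accumulate the target (ceiling division)."""
    if impressions_per_day <= 0:
        raise ValueError("the ad receives no impressions")
    return -(-target_impressions // impressions_per_day)


if __name__ == "__main__":
    target = 20_000  # hypothetical impressions needed before judging an ad

    # Mature system: 100k impressions/day, 10% exploration, 10 new ads competing.
    in_system = impressions_per_day_in_system(100_000, exploration_pct=10, new_ads=10)
    # Separate campaign: a dedicated budget buys 5k impressions/day for this ad.
    in_lab = 5_000

    print("inside the system:", days_to_reach(target, in_system), "days")
    print("separate campaign:", days_to_reach(target, in_lab), "days")
```

Under these assumptions the new ad needs 20 days to reach 20,000 impressions inside the system versus 4 days in its own campaign. The exact figures matter less than the ratio, which is driven by how thin the exploration slice is.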

Attribution Window Distortion

The sales cycle plays an important role in the testing process. A long delay between ad engagement and the eventual conversion can distort results, making ads look weak early on.

Understanding your average time lag to conversion is necessary for setting expectations. For example, with a 14-day average lag, creative analysis isn’t meaningful until the 28-day mark, when the first 14 days of delivery have each had a full lag window to collect conversion credit.
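The 14-day example generalizes into a simple rule: wait until twice the average lag has passed since launch. The sketch below encodes that rule; the function names are hypothetical, and the 2x multiplier is an extrapolation from the example rather than a platform setting.

```python
"""When is it safe to read a creative test, given average conversion lag?

Assumption: generalizes the 14-day -> 28-day example into a 2x-lag rule.
Function names are hypothetical.
"""
from datetime import date, timedelta


def matured_days(days_live: int, avg_lag_days: int) -> int:
    """Days of delivery whose conversions have had a full lag window to arrive."""
    return max(0, days_live - avg_lag_days)


def earliest_read_day(avg_lag_days: int) -> int:
    """Day on which `avg_lag_days` worth of delivery has fully matured."""
    return 2 * avg_lag_days


def earliest_read_date(launch: date, avg_lag_days: int) -> date:
    return launch + timedelta(days=earliest_read_day(avg_lag_days))


if __name__ == "__main__":
    print(earliest_read_day(14))                      # day 28
    print(matured_days(days_live=28, avg_lag_days=14))  # 14 matured days
    print(earliest_read_date(date(2026, 3, 1), 14))
```

For a campaign launched on March 1, 2026 with a 14-day average lag, `earliest_read_date` returns March 29, 2026. Reading results before then compares ads on partially attributed data.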

To find your average time lag, see my guides on where to find conversion lag in Google and how to calculate attribution lag in Meta.

With these considerations in mind, let’s look at how to structure creative in a modern advertising strategy.

The Four Types of Creative

Not all ads are designed to accomplish the same outcome, and because of that, they shouldn’t be tested against each other.

Effective creative testing starts by defining the role an ad is meant to play in the buying process. That role isn’t determined by business goals alone, but by how advertising influences human behavior.

Advertising moves people through stages: awareness, consideration, decision. Some messages introduce the problem. Others reinforce credibility. Others communicate the offer. And some accelerate action.

When these functions are mixed together in a single test, performance differences reflect proximity to conversion, not creative quality.

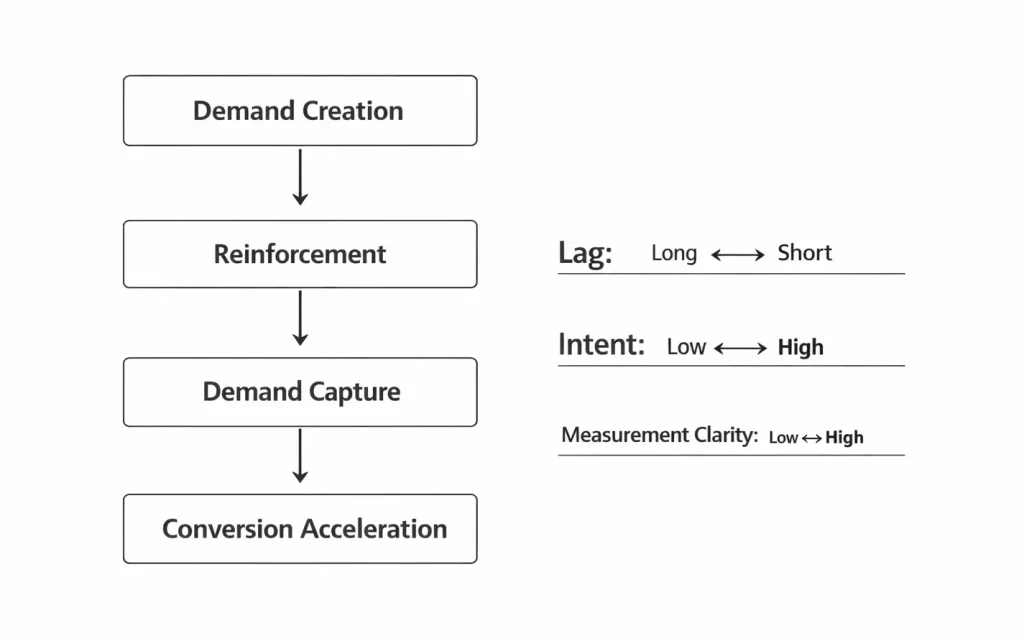

To test correctly, creative must first be categorized by function. This framework breaks messaging into four distinct roles.

Demand Creation

Demand creation marks the first step in any user’s journey. Often, this is the point at which the user doesn’t know they have a problem, or has just found out.

Demand comes from two major inputs: awareness and consideration. These ads should serve both purposes.

- Purpose: Introduce brand, expand category consideration

- Metrics: Hook rate, dwell, micro-conversions, onsite engagement

- Lag: Long

Get more info on how to measure creative in my Meta Andromeda Creative Analysis breakdown.

Reinforcement

In the path to conversion, reinforcement acts as a consideration builder. Most people won’t jump straight from awareness to being ready to buy; reinforcement gives them more reasons to buy.

This creative often includes social proof, testimonials, and user-generated content that increase credibility. It’s estimated that 95-98% of shoppers read reviews before buying, so including reviews in your ad messaging helps move the process along faster.

- Purpose: Build credibility, accelerate consideration

- Metrics: Cost per click, conversion rate

- Lag: Medium

Demand Capture

Because a portion of the market is ready to buy at any given time, demand capture creative is essential for stating your offer.

This can take the form of a direct call to action (e.g., buy now, sign up), but it benefits from some persuasive messaging. Comparisons and value-based messaging are highly effective at continuing to educate while calling users to action.

- Purpose: Target high-intent users, communicate the offer

- Metrics: CPA, ROAS

- Lag: Short

Keep in mind that these ads often show the best conversion performance simply because they resonate most with users at the end of their journey.

Conversion Acceleration

When demand capture isn’t as effective as it needs to be, conversion acceleration is a useful tactic. Incentive and urgency messages aim to prompt action sooner than it would otherwise happen.

Think of ads that include discounts like 15% off or countdown timers leading up to the start of an event. These are powerful messages to spur action, but can come at a cost.

Use them sparingly, as they can eat into bottom-line profitability.

- Purpose: Reduce friction, offer urgency

- Metrics: CVR

- Lag: Very short

These ads are a fantastic choice for retargeting-only campaigns because they aim to speed up a decision, not reach your entire target audience.
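Because each function is judged on different metrics, it helps to compute KPIs per function rather than one leaderboard for every ad. The sketch below maps each function to the primary metrics listed above. It assumes "hook rate" means 3-second video views divided by impressions, a common industry convention rather than an official platform definition, and it leaves out dwell time and micro-conversions; the field names are hypothetical.

```python
"""Compute each creative function's primary KPIs from raw ad stats.

Assumptions: hook rate = 3-second views / impressions (industry convention);
dwell time and micro-conversions are omitted. Field names are hypothetical.
"""
from dataclasses import dataclass
from typing import Optional


@dataclass
class AdStats:
    impressions: int
    three_sec_views: int
    clicks: int
    conversions: int
    spend: float
    revenue: float


def _ratio(numerator: float, denominator: float) -> Optional[float]:
    """Return numerator / denominator, or None when the denominator is zero."""
    return numerator / denominator if denominator else None


METRICS = {
    "hook_rate": lambda s: _ratio(s.three_sec_views, s.impressions),
    "cpc": lambda s: _ratio(s.spend, s.clicks),
    "cvr": lambda s: _ratio(s.conversions, s.clicks),
    "cpa": lambda s: _ratio(s.spend, s.conversions),
    "roas": lambda s: _ratio(s.revenue, s.spend),
}

# Primary KPIs and expected lag per creative function, per the lists above.
FUNCTIONS = {
    "demand_creation": (["hook_rate"], "long"),
    "reinforcement": (["cpc", "cvr"], "medium"),
    "demand_capture": (["cpa", "roas"], "short"),
    "conversion_acceleration": (["cvr"], "very short"),
}


def primary_kpis(function: str, stats: AdStats) -> dict[str, Optional[float]]:
    """Return only the KPIs that matter for this creative function."""
    metric_names, _lag = FUNCTIONS[function]
    return {name: METRICS[name](stats) for name in metric_names}


if __name__ == "__main__":
    stats = AdStats(impressions=10_000, three_sec_views=2_500, clicks=200,
                    conversions=10, spend=400.0, revenue=1_200.0)
    for fn in FUNCTIONS:
        print(fn, primary_kpis(fn, stats))
```

With the sample stats, demand creation reports a 25% hook rate while demand capture reports a $40 CPA and 3.0 ROAS. These are different yardsticks, which is why the two kinds of ads shouldn't be ranked against each other.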

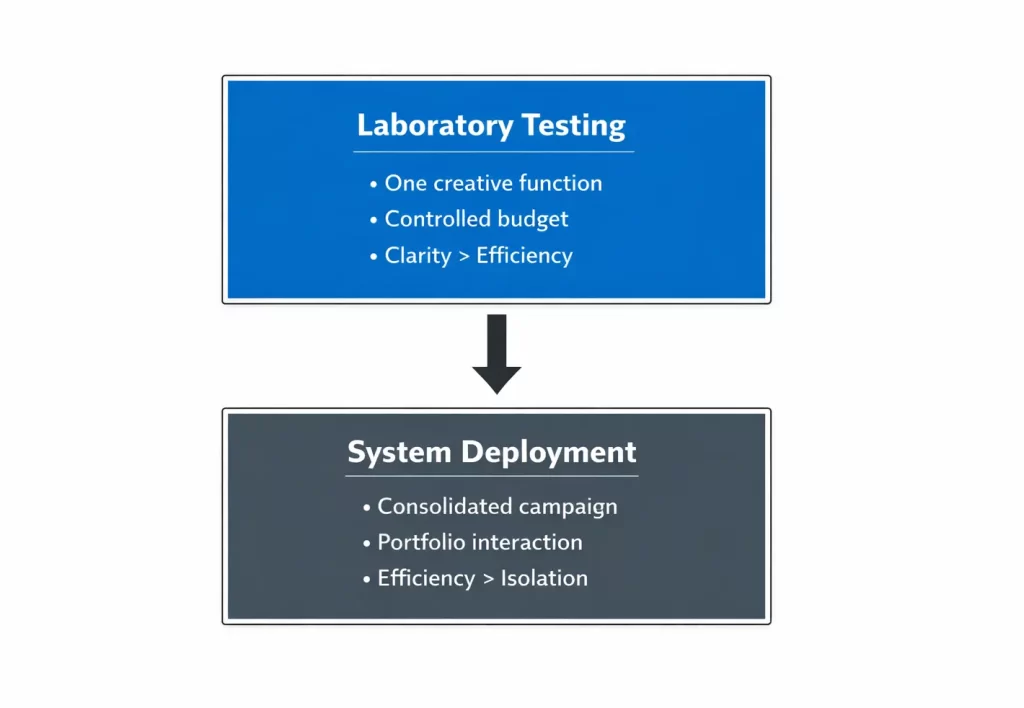

The Two-Layer Model for Creative Testing

Creative functions cannot be tested together without contaminating the result. When demand creation, reinforcement, and acceleration creatives share the same delivery pool, performance differences reflect audience makeup, proximity to purchase, and optimization signal – not creative quality.

For true creative testing, separate the creative functions into their own tests.

Why Demand Creation is Most Important to Test

Demand creation is the most important messaging to test independently because of its distance from the point of conversion.

Whereas demand capture and conversion acceleration messages are easy to measure with conversions, demand creation ads are highly variable and often need softer metrics like hook rate or click-through rate to validate performance.

That means these ads typically get less exposure when they compete with conversion-focused creative. However, that doesn’t make them less valuable.

The greatest long-term leverage lives in demand creation. That’s where angle, positioning, and narrative change who you attract – not just who you convert.

It’s also the easiest to misjudge, usually because the wrong evidence is used to evaluate it.

Laboratory Testing

In this campaign type, isolate one creative function and run ads with their own dedicated budget. That means:

- Create a separate campaign

- Use a dedicated budget

- Use the same optimization event (purchase, lead)

Measure results using one or two KPIs. The top-performing ads will provide learnings about what angles work and how to iterate on future messages.
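The setup rules above can be checked mechanically before any results are read. This sketch assumes hypothetical ad records (in practice they would come from a platform report export); it rejects a test that mixes campaigns, creative functions, or optimization events, then ranks ads on the single KPI chosen for the test. It does not verify the dedicated budget, which lives in campaign settings.

```python
"""Sanity-check a laboratory test and rank its ads on one KPI.

Assumption: the LabAd records and field names are hypothetical.
"""
from dataclasses import dataclass


@dataclass
class LabAd:
    name: str
    campaign: str
    function: str            # e.g. "demand_creation"
    optimization_event: str  # e.g. "purchase" or "lead"
    kpi_value: float         # the one KPI chosen for this test


def validate_lab_test(ads: list[LabAd]) -> None:
    """Raise ValueError if the test mixes campaigns, functions, or optimization events."""
    for attr in ("campaign", "function", "optimization_event"):
        values = {getattr(ad, attr) for ad in ads}
        if len(values) != 1:
            raise ValueError(f"lab test mixes {attr} values: {sorted(values)}")


def rank_ads(ads: list[LabAd], higher_is_better: bool = True) -> list[str]:
    """Return ad names ordered from best to worst on the chosen KPI."""
    validate_lab_test(ads)
    ordered = sorted(ads, key=lambda ad: ad.kpi_value, reverse=higher_is_better)
    return [ad.name for ad in ordered]


if __name__ == "__main__":
    ads = [
        LabAd("problem-angle", "lab-dc-01", "demand_creation", "purchase", 0.31),
        LabAd("founder-story", "lab-dc-01", "demand_creation", "purchase", 0.27),
        LabAd("stat-hook", "lab-dc-01", "demand_creation", "purchase", 0.34),
    ]
    print(rank_ads(ads))  # KPI here is hook rate, so higher is better
```

For cost metrics such as CPA, pass `higher_is_better=False` so the cheapest ad ranks first.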

The Testing Efficiency Trade-off

Most advertisers skip this phase because it often hurts efficiency, especially when testing demand creation topics that are far from the point of conversion.

That’s because laboratory campaigns have:

- An audience concentrated in one stage of the journey

- Lower retargeting support

In exchange, you reduce strategic risk. Understanding how ads perform in a controlled environment improves confidence in both the results and the insights they produce.

Once winners are identified, plug them into the larger system.

System Deployment

The system takes learnings from the laboratory and produces efficient results using a consolidated campaign structure.

Instead of isolating one creative function, this layer blends demand creation, reinforcement, demand capture, and conversion acceleration into a single learning environment.

This is where the algorithm does what it does best: determine the right messaging mix for the audience based on intent, behavior, and proximity to conversion.

In this environment, creative delivery is most affected by audience makeup. If the audience is less aware, more demand creation ads will deliver. If awareness and consideration are high, demand capture and acceleration creatives win most of the delivery.

Budget Consideration

Most of your budget should live in the system – to the tune of 70-90% depending on how aggressively you are creating new content.

A higher testing budget accelerates creative learning, but ultimately sacrifices efficiency.
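The 70-90% guideline translates into a simple split. This sketch uses a hypothetical `split_budget` helper that takes the system share as a whole-number percentage and rejects values outside that range.

```python
"""Split a monthly budget between the system and the laboratory.

Assumption: hypothetical helper; the 70-90% bounds come from the guideline above.
"""


def split_budget(monthly_budget: float, system_pct: int = 80) -> tuple[float, float]:
    """Return (system, laboratory) budgets rounded to cents."""
    if not 70 <= system_pct <= 90:
        raise ValueError("system share should stay between 70% and 90%")
    system = round(monthly_budget * system_pct / 100, 2)
    return system, round(monthly_budget - system, 2)


if __name__ == "__main__":
    print(split_budget(100_000, system_pct=80))  # aggressive content production
    print(split_budget(100_000, system_pct=90))  # lighter testing cadence
```

A $100,000 monthly budget at 80% leaves $20,000 for laboratory tests; moving to 90% halves that to $10,000. The more aggressively you produce new content, the lower the system share you'd choose.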

The value in creative testing is understanding what’s working. Feeding the system validated creative with proven performance not only improves confidence but also compounds your advantage as performance improves.

In 2026, the advantage no longer belongs to the advertiser who creates the most ads. It belongs to the advertiser who tests creative intentionally before handing it to the algorithm.

The laboratory shapes the inputs, the system multiplies them.

When Not to Use the Two-Layer Model

While creative testing has notable benefits, it’s not required for every advertiser. It’s highly dependent on budget and campaign maturity.

New advertisers and small-budget advertisers won’t see the same benefits from creative testing because they haven’t reached the exploitation stage, where the algorithm is already pushing known winners.

In other words, new creative introduced into the system still has a high chance of becoming the next ‘best’ creative.

Some rules of thumb for when not to run creative tests:

- Spending under $50k/month

- Running short-term promotions

- Low consideration product categories

- Generating <30 conversions per week

In these cases, creative testing could actually hurt more than it helps. Laboratory testing becomes most valuable when you are:

- Scaling

- Entering new markets

- Repositioning messaging

- Launching new product categories

Creative testing is a strategic layer, not a requirement.
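These rules of thumb can be turned into a quick checklist. The thresholds ($50k/month, 30 conversions/week) come from the lists above; the function, its argument names, and the choice to let any blocker override the growth triggers are assumptions made for this sketch.

```python
"""Encode the rules of thumb for when laboratory testing is worth it.

Assumptions: hypothetical function and field names; any blocker overrides
the triggers, and with no trigger present, lab testing is treated as optional.
"""
from dataclasses import dataclass


@dataclass
class AccountProfile:
    monthly_spend: float
    weekly_conversions: int
    short_term_promotion: bool = False
    low_consideration_category: bool = False
    scaling: bool = False
    entering_new_market: bool = False
    repositioning_messaging: bool = False
    launching_new_category: bool = False


def lab_testing_check(p: AccountProfile) -> tuple[bool, list[str]]:
    """Return (recommended, reasons)."""
    blockers = []
    if p.monthly_spend < 50_000:
        blockers.append("spend under $50k/month")
    if p.short_term_promotion:
        blockers.append("short-term promotion")
    if p.low_consideration_category:
        blockers.append("low consideration category")
    if p.weekly_conversions < 30:
        blockers.append("fewer than 30 conversions/week")
    if blockers:
        return False, blockers

    triggers = [label for flag, label in (
        (p.scaling, "scaling"),
        (p.entering_new_market, "entering a new market"),
        (p.repositioning_messaging, "repositioning messaging"),
        (p.launching_new_category, "launching a new product category"),
    ) if flag]
    if triggers:
        return True, triggers
    return False, ["no trigger present; lab testing is optional"]


if __name__ == "__main__":
    print(lab_testing_check(AccountProfile(monthly_spend=30_000, weekly_conversions=50)))
    print(lab_testing_check(AccountProfile(monthly_spend=120_000, weekly_conversions=80,
                                           scaling=True)))
```

The first account is blocked by spend alone; the second clears every threshold and is scaling, so the check recommends a laboratory layer.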

What Creative Testing Means in 2026

Creative testing hasn’t disappeared with the rise of algorithms – it’s just become more complicated.

Automated bidding, blended audiences, and shifting user behaviors have all made traditional A/B tests less reliable. Differences in performance now more clearly reflect where a user is in their journey, not how strong the message really is.

The advertisers who win in 2026 won’t just be the ones producing the most creative. They’ll be the ones who learn from the creative they produce.

Laboratory testing creates controlled insight. System deployment creates efficient scale.

Confuse the two and you optimize noise. Separate them, and you train the algorithm with intention.