The Complete Guide to Reddit Comment Scraping for Beginners

There are countless guides that explain how to scrape Reddit comments, but most get lost in technical jargon and complex coding concepts. This guide takes a different approach – providing the simplest solution to get you started collecting Reddit data, even if you’ve never written a line of code.

But first, let’s talk about why you’d want to collect Reddit data in the first place.

Why Reddit Data Matters for Market Research

Anyone who’s searched for product recommendations knows the internet is filled with affiliate garbage: content that recommends products in exchange for a monetary kickback. Most savvy internet users know the first place to check for real opinions is a Reddit thread. With over 500 million monthly active users and 100,000+ active communities, Reddit represents one of the largest sources of authentic user discussions online.

The Power of Reddit Comments

Reddit comments are one of the best sources of user-generated content, and it’s no surprise that language models like ChatGPT train on Reddit data. Unlike curated reviews, Reddit discussions tend to be more candid and detailed, offering insight into consumer behavior and preferences.

This makes Reddit data particularly valuable for:

- Market research and competitive analysis

- Product feedback and feature requests

- Understanding customer pain points

- Content ideation and trend spotting

- Brand sentiment analysis

While manual analysis of Reddit threads is possible, web scraping automates the process. Scraping has been used to extract information from websites for about as long as search engines have existed, but modern tools make it more accessible than ever.

Getting Started with Reddit Scraping

This article includes step-by-step instructions on how to scrape Reddit comments, including:

- How to set up Reddit API access

- Building a scraper with PRAW (the Python Reddit API Wrapper)

- Running the Reddit Scraper

- Data Extraction & Analytics

Step 1: Setting up Reddit API Access

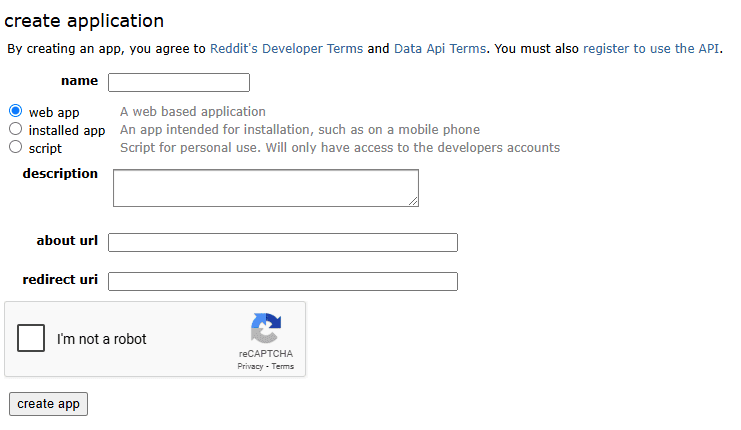

To set up API access, log in to Reddit and visit the app preferences page (reddit.com/prefs/apps).

There you should see a prompt to ‘create an app’. Clicking it opens the app creation form.

The following fields are required:

- Name (e.g. Reddit Scraper)

- App type (select ‘script’, the option intended for personal use)

- Redirect URI (if you don’t have one, use http://localhost:8080)

Clicking ‘create app’ will generate the following:

- Client ID: the string shown under ‘personal use script’, beneath the app name

- Client secret: the value labeled ‘secret’

From here, you can set up the web scraper.
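Because the client secret works like a password, you may prefer not to paste it directly into the script. The sketch below reads the credentials from environment variables instead and returns them as the keyword arguments that praw.Reddit accepts. The variable names (REDDIT_CLIENT_ID, REDDIT_CLIENT_SECRET, REDDIT_USER_AGENT) and the helper load_reddit_credentials are hypothetical choices for this example, not names Reddit or PRAW require.

```python
import os


def load_reddit_credentials(env=None):
    """Return praw.Reddit keyword arguments read from environment variables.

    The variable names below are hypothetical choices for this example;
    Reddit and PRAW do not require these exact names.
    """
    env = os.environ if env is None else env
    names = {
        "client_id": "REDDIT_CLIENT_ID",
        "client_secret": "REDDIT_CLIENT_SECRET",
        "user_agent": "REDDIT_USER_AGENT",
    }
    # Collect every variable that is unset or empty so the error lists them all at once
    missing = [var for var in names.values() if not env.get(var)]
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    # Map praw.Reddit's parameter names to the values found in the environment
    return {param: env[var] for param, var in names.items()}
```

Once PRAW is installed in Step 2, you could set each variable once in Command Prompt (for example setx REDDIT_CLIENT_ID "your_client_id", then open a new window so it takes effect) and connect with reddit = praw.Reddit(**load_reddit_credentials()) instead of typing the values into the script.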

Step 2: Coding a Reddit Scraper

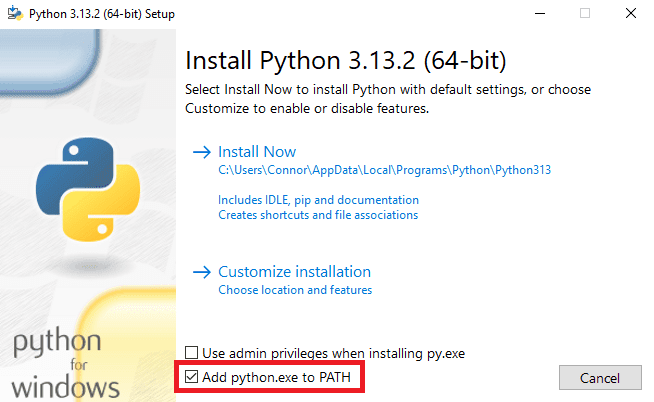

Download Python

Before you begin, download and install the latest version of Python. During installation, make sure to check the box labeled ‘Add python.exe to PATH’ so Command Prompt recognizes the python command. Then install PRAW by opening Command Prompt (see Step 3) and running ‘pip install praw’.
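To confirm the installation worked before writing the scraper, you could save a short check script (a hypothetical check_setup.py, not something PRAW provides) and run it with python check_setup.py from Command Prompt, the same way Step 3 runs the scraper. It prints the Python version and whether the praw package can be found.

```python
import importlib.util
import sys


def check_setup():
    """Report the running Python version and whether the praw package is importable."""
    return {
        "python_version": ".".join(str(part) for part in sys.version_info[:3]),
        # find_spec returns None when no installed package matches the name
        "praw_installed": importlib.util.find_spec("praw") is not None,
    }


if __name__ == "__main__":
    status = check_setup()
    print(f"Python {status['python_version']}")
    if status["praw_installed"]:
        print("PRAW is installed.")
    else:
        print("PRAW was not found. Run: pip install praw")
```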

Create the Scraper Script

Next, create the scraper script as a text file. I recommend pasting the following into Notepad.

```python
import praw
from datetime import datetime


def scrape_subreddit_titles_comments(subreddit_name, post_limit=100):
    # Connect to Reddit with the credentials from your app (see "API Information" below)
    reddit = praw.Reddit(
        client_id="YOUR_CLIENT_ID",
        client_secret="YOUR_CLIENT_SECRET",
        user_agent="YOUR_USER_AGENT",
    )
    subreddit = reddit.subreddit(subreddit_name)

    # Name the output file after the subreddit plus the current date and time
    timestamp = datetime.now().strftime("%Y%m%d_%H%M%S")
    filename = f"{subreddit_name}_data_{timestamp}.txt"

    with open(filename, "w", encoding="utf-8") as f:
        # Walk through the subreddit's current "hot" posts
        for post in subreddit.hot(limit=post_limit):
            # Write post title
            f.write(f"Title: {post.title}\n")
            f.write("-" * 40 + "\n")

            # Drop "load more comments" placeholders so only loaded comments remain
            post.comments.replace_more(limit=0)

            # Write the first 10 top-level comments (replies are not included)
            for comment in post.comments[:10]:
                f.write(f"{comment.body}\n")
                f.write("-" * 40 + "\n")

            # Separator between posts
            f.write("=" * 80 + "\n\n")

    print(f"Saved results to {filename}")


if __name__ == "__main__":
    subreddit_name = "SUBREDDIT_NAME"  # Enter the subreddit name you want to scrape, without r/
    scrape_subreddit_titles_comments(subreddit_name)
```

This code is set to capture:

- Post title

- Top-level comments (up to 10 per post)

It’s also possible to capture information such as the comment author, the timestamp, or the score. These were left out to keep the text file lighter.
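If you do want those extra fields, PRAW comments expose them as author, score, and created_utc (a Unix timestamp in UTC). The sketch below is one possible way to format them into a single line; format_comment is a hypothetical helper name and the line layout is an arbitrary choice.

```python
from datetime import datetime, timezone


def format_comment(comment):
    """Build one output line from a PRAW Comment, including author, score and timestamp.

    comment.author is None when the account has been deleted, so fall back to "[deleted]".
    comment.created_utc is a Unix timestamp in seconds (UTC).
    """
    author = f"u/{comment.author.name}" if comment.author is not None else "[deleted]"
    posted = datetime.fromtimestamp(comment.created_utc, tz=timezone.utc)
    # Collapse line breaks and repeated spaces so each comment stays on one line
    body = " ".join(comment.body.split())
    return f"[{posted:%Y-%m-%d %H:%M} UTC] {author} ({comment.score} points): {body}"
```

To use it, define format_comment above scrape_subreddit_titles_comments and replace f.write(f"{comment.body}\n") with f.write(format_comment(comment) + "\n"). Posts also have score and created_utc attributes if you want the same details next to each title.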

API Information

Update the following placeholders in the script, using the credentials generated in Step 1 where needed:

- client_id: the string shown under ‘personal use script’, beneath your app name

- client_secret: the value listed as ‘secret’

- user_agent: a unique, descriptive name (e.g. subreddit_scraper). Reddit’s API rules suggest a format like windows:subreddit_scraper:v1.0 (by /u/your_username)

- subreddit_name: the specific subreddit being scraped

Note: Enter the subreddit name without the r/ prefix (e.g. marketing, not r/marketing).
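If you tend to copy subreddit names straight from the browser, a small helper can strip the prefix for you. normalize_subreddit_name is a hypothetical convenience function, not part of PRAW.

```python
def normalize_subreddit_name(raw_name):
    """Turn inputs like "r/marketing", "/r/marketing/" or " marketing " into "marketing"."""
    # Remove surrounding whitespace, then any leading or trailing slashes
    name = raw_name.strip().strip("/")
    # Drop an "r/" prefix regardless of case ("r/" or "R/")
    if name.lower().startswith("r/"):
        name = name[2:]
    return name.strip("/")
```

For example, scrape_subreddit_titles_comments(normalize_subreddit_name("r/marketing")) scrapes the marketing subreddit.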

Query Limit

Another customizable aspect of the code is how many subreddit posts to capture.

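The post limit is currently set to 100 (post_limit=100). To change it, pass a different value when calling the function, e.g. scrape_subreddit_titles_comments(subreddit_name, post_limit=25). Reddit listings stop at roughly 1,000 posts, so higher values won’t return more.

Note: Reddit’s API has rate limits, so larger requests take longer. PRAW automatically waits when it approaches the limit, so a large run may pause rather than fail.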
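To get a feel for why larger limits take longer, the rough estimate below counts API calls per run. It is a back-of-the-envelope sketch, not something PRAW reports: it assumes listing pages of up to 100 posts, one request per post to load its comments, and a budget of 100 requests per minute (a figure Reddit has published for free API access; check the current terms before relying on it). estimate_requests is a hypothetical helper.

```python
import math


def estimate_requests(post_limit, posts_per_page=100):
    """Roughly estimate the API calls made by one run of the scraper (hypothetical helper).

    Assumes each listing page holds up to `posts_per_page` posts, that reading
    post.comments costs one call per post, and that replace_more(limit=0)
    adds no calls because it only discards "load more comments" placeholders.
    """
    listing_calls = math.ceil(post_limit / posts_per_page)
    comment_calls = post_limit
    return listing_calls + comment_calls


if __name__ == "__main__":
    assumed_per_minute = 100  # assumption: check Reddit's current Data API terms
    for limit in (25, 100, 1000):
        calls = estimate_requests(limit)
        minutes = calls / assumed_per_minute
        print(f"post_limit={limit}: about {calls} requests, roughly {minutes:.1f} min")
```

The per-post comment request dominates the total, which is why run time grows almost linearly with post_limit. The one-time login request PRAW makes is not counted.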

Saving the PRAW file

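Save the file with a .py extension so it’s read as a Python script, e.g. reddit_scraper.py or web_scraper.py. Avoid spaces in the name so you don’t need quotes when running it, and don’t name it praw.py, which would stop Python from finding the real PRAW library.

In Notepad, set ‘Save as type’ to ‘All files’ so the file isn’t saved as reddit_scraper.py.txt.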

Now it’s time to run the script.

Step 3: Scrape Reddit Post Comments

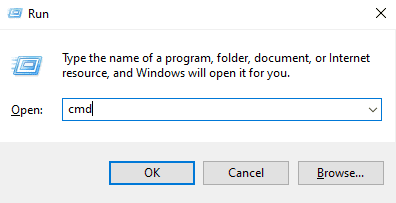

Open Command Prompt

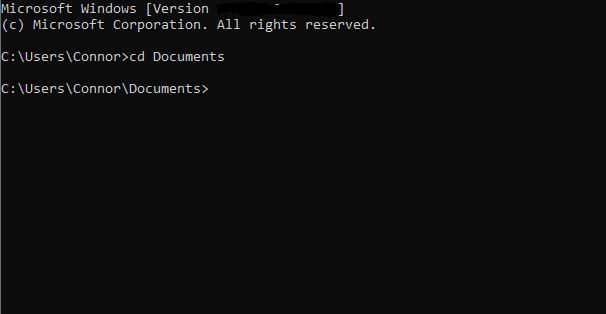

Press Windows + R to open the Run dialog, type ‘cmd’, and press Enter.

Open Documents

If the file was saved in your ‘Documents’ folder, type ‘cd Documents’ and press Enter. This moves Command Prompt into that folder.

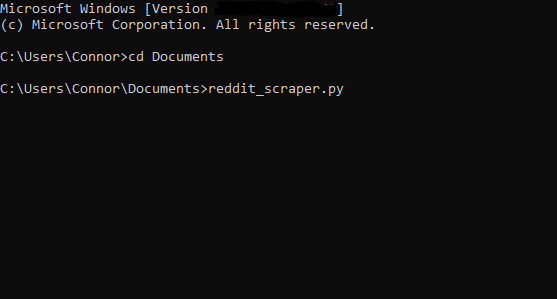

Run the Scraper

Type ‘python’ followed by the file name you chose earlier (e.g. ‘python reddit_scraper.py’) and press Enter to run the script.

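This will generate a text file named after the subreddit and the time of the run (SUBREDDIT_NAME_data_YYYYMMDD_HHMMSS.txt) that includes the following:

- Post titles

- Top-level comments (up to 10 per post)

Nested replies are not included, because the script only reads each post’s top-level comments.

Locate the file in the folder you ran the script from (your Documents folder in this example). Then it’s time to analyze the data using the tool of your choice.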
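If you’d rather work with the data in a spreadsheet or your own scripts, the text file can be turned back into structured records. The sketch below assumes the exact layout the scraper writes: a ‘Title: ’ line, a line of 40 dashes after the title and after each comment, and a line of 80 ‘=’ characters after each post. parse_scrape_file and write_csv are hypothetical helper names.

```python
import csv

COMMENT_SEP = "-" * 40  # written after the title and after each comment
POST_SEP = "=" * 80     # written after each post's comments


def parse_scrape_file(text):
    """Turn the scraper's text output back into a list of posts.

    Each post is a dict: {"title": str, "comments": [str, ...]}.
    Multi-line comments are rejoined with newlines.
    """
    posts = []
    current = None
    buffer = []
    for line in text.splitlines():
        if current is None:
            # Outside a post: wait for the next title, skipping blank lines
            if line.startswith("Title: "):
                current = {"title": line[len("Title: "):], "comments": []}
            continue
        if line == COMMENT_SEP:
            # A separator closes the comment collected so far (none after the title)
            if buffer:
                current["comments"].append("\n".join(buffer))
                buffer = []
        elif line == POST_SEP:
            posts.append(current)
            current, buffer = None, []
        else:
            buffer.append(line)
    return posts


def write_csv(posts, path):
    """Write one row per comment (title, comment) so the data opens in a spreadsheet."""
    with open(path, "w", newline="", encoding="utf-8") as f:
        writer = csv.writer(f)
        writer.writerow(["title", "comment"])
        for post in posts:
            for comment in post["comments"]:
                writer.writerow([post["title"], comment])
```

For example, read the file’s contents into text, then call posts = parse_scrape_file(text) and write_csv(posts, "comments.csv") to get one row per comment. One limitation: a comment whose body contains a line of exactly 40 dashes would be split in two.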

Step 4: Data Extraction & Sentiment Analysis

Upload the text file to ChatGPT, Gemini, Claude, or whichever language model you prefer.

From here, prompt it to pull the following:

- Common topics of discussion

- How many times topics are mentioned

- Jargon or buzzwords that are unique to the audience

- Overall sentiment

Use these data points to create content topics, unique selling propositions, competitive differentiation and more.
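Language models can be unreliable at exact counts, so for the ‘how many times topics are mentioned’ question it can help to cross-check with a simple keyword count. The sketch below is one way to do that, assuming you supply the keywords yourself; count_mentions is a hypothetical helper that ignores case and matches whole words only.

```python
import re
from collections import Counter


def count_mentions(text, keywords):
    """Count case-insensitive, whole-word mentions of each keyword or phrase in text."""
    counts = Counter()
    for keyword in keywords:
        # (?<!\w) and (?!\w) require a non-word character (or the text edge) on both sides,
        # so "app" doesn't match inside "apple"; unlike \b they also work for "C++"
        pattern = r"(?<!\w)" + re.escape(keyword) + r"(?!\w)"
        counts[keyword] = len(re.findall(pattern, text, flags=re.IGNORECASE))
    return counts
```

For example, read the scraped file into text and print count_mentions(text, ["pricing", "customer support", "free trial"]).most_common(). Multi-word phrases only match when the spacing in the comments is the same.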

Turning Insights into Action:

- For more advanced sentiment analysis techniques, check out How to Turn AI into a Customer Sentiment Analysis Tool

- For specific examples of how to transform these insights into compelling campaigns, see 6 High-Converting Ad Creative Examples for 2025

Gain Insights from Reddit Data with Web Scraping

Learning how to scrape Reddit comments opens up a world of possibilities for market research and content development. The process might seem technical at first – setting up API access, web scraping, and collecting Reddit post comments – but the payoff is worth it. Reddit data provides unfiltered insights that you simply can’t get from traditional market research.

Whether you’re analyzing customer sentiment, researching product feedback, or looking for content ideas, web scraping and AI analysis create a powerful toolkit for understanding your target audience through authentic user-generated content.

So don’t wait. Get scraping and start turning Reddit data into actionable insights for your business.