How to Make Confident Marketing Decisions When the Data Is Incomplete

Modern marketing is built on numbers. Metrics, dashboards, KPIs, and reports are all meant to do one thing: help us make better decisions.

But it wasn’t always this way.

Thirty years ago, before digital channels and real-time analytics, marketing relied on far less information. You ran an ad, waited, and looked at what happened to sales. There was no attribution model, no multi-touch journey, no dashboard lighting up in real time.

And yet, many brands still made excellent decisions.

Even today, despite more data than ever, marketing decisions still rely on judgment, inference, and assumptions. The difference is that uncertainty is now hidden behind layers of metrics.

Confident decision-making in the face of incomplete data is more art than science. It requires knowing the difference between signal and noise, and understanding when directional data is enough. This article outlines a framework for making better marketing decisions when certainty isn’t available — which is most of the time.

TL;DR

- Marketing data will always be incomplete

- Most bad decisions come from false certainty, not lack of data

- Match evidence strength to decision stakes

- Directional data is useful when applied intentionally

- Incrementality is for decisions you can’t afford to guess on

The Data Will Never Be Perfect (And That’s the Point)

It’s uncomfortable to make decisions with incomplete data. But in marketing, uncertainty isn’t a temporary problem – it’s a permanent condition.

Attribution is fundamentally a model, not the truth. No matter how sophisticated platforms like Google or Meta become, attribution can only approximate reality.

Conversion lag is baked into user behavior, and short attribution windows, like Facebook’s 7-day click window, only widen the gap.

Uncontrollable factors like seasonality, promotions, inventory, creative fatigue, and a whole slate of environmental factors (who knew weather would affect buying decisions so much?) blur the line between correlation and causation.

Modern privacy constraints add another layer of uncertainty. Even with perfect tracking infrastructure, data is still lost to cross-device journeys, consent opt-outs, and offline purchases.

Your job isn’t to eliminate uncertainty. It’s to make good decisions with it.

The 5 Traps That Create “Confident” but Wrong Decisions

These traps exist because they feel productive. In the absence of certainty, they offer clarity – just not the kind you want.

Before making a decision, check yourself against this list.

1. Last-Click Certainty

Last-click attribution dominates most ad platforms. It’s useful for platform optimization, but it lacks the depth needed for strategic decisions. Treating last-click performance as truth creates a false sense of confidence.

2. Metric Hopping

Most marketers are guilty of this. When results disappoint, it’s tempting to switch KPIs instead of confronting reality. Progress only happens when you commit to a goal, measure trends over time, and adjust strategy honestly.

3. Platform Monoculture

Relying on a single data source because it’s convenient – often Google Analytics – introduces bias. Strong analysis requires multiple perspectives that act as checks and balances.

4. Short-Window Bias

A common mistake among junior-level marketers is making changes too soon. Judging performance after only a few days ignores conversion lag and normal volatility. Short time windows exaggerate noise and lead to reactive decisions that erase learning.

That’s why I structure weekly reports with trend charts that include weeks or months of data.
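If you want to automate that view, a trailing average is one simple way to do it. The sketch below is illustrative, not a reporting standard: it assumes you already have one CPA value per week and smooths it with a 4-week trailing mean, so a single noisy week moves the line less than it moves the raw number. The `trailing_mean` name, the window length, and the sample CPAs are all hypothetical.

```python
from typing import List, Optional


def trailing_mean(values: List[float], window: int = 4) -> List[Optional[float]]:
    """Trailing mean for each week; None until a full window of history exists."""
    if window < 1:
        raise ValueError("window must be at least 1")
    smoothed: List[Optional[float]] = []
    for i in range(len(values)):
        if i + 1 < window:
            smoothed.append(None)  # not enough history yet
        else:
            chunk = values[i + 1 - window : i + 1]
            smoothed.append(sum(chunk) / window)
    return smoothed


if __name__ == "__main__":
    weekly_cpa = [42, 55, 40, 47, 61, 44]  # hypothetical weekly CPAs
    print(trailing_mean(weekly_cpa))  # [None, None, None, 46.0, 50.75, 48.0]
```

In this made-up series, raw CPA jumps about 30% in week five while the trailing mean rises about 10%, which is closer to the “is this a trend?” question a weekly report should answer.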

5. Correlation vs Causation

Naming a cause without ruling out alternatives is the quickest path to poor judgment. Causality in marketing is rare and must be earned, not assumed.

These traps provide context for the framework that follows.

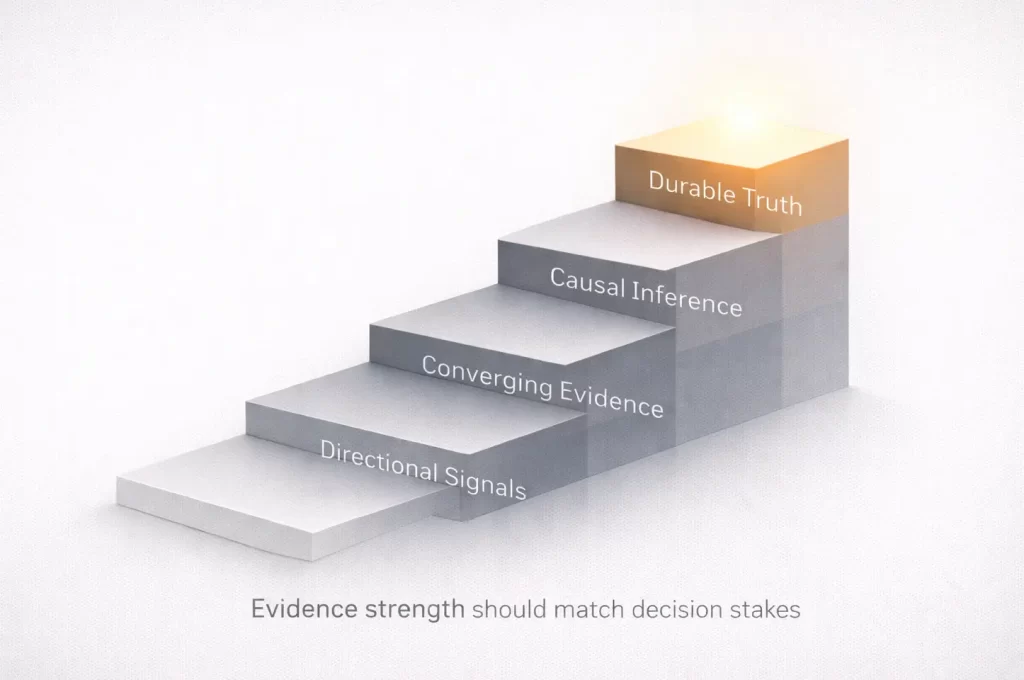

The Confidence Ladder (How Strong Does Your Evidence Need To Be?)

Not every decision requires the same level of certainty. The strength of your evidence should match the stakes of the decision.

Directional Signals (Fast, Imperfect)

These are primarily ad platform metrics like spend, CTR, CPC, or attributed conversions. They act as leading indicators and are useful for quick reads.

Use directional signals for creative iteration, early performance checks, and low-risk optimizations. Be aware that platform bias and noise are always present.

Converging Evidence (Multiple Sources)

Converging evidence brings multiple data sources together – ad platforms, GA4, CRM systems, or offline sales data.

When independent sources tell a similar story, confidence increases. This level is appropriate for budget reallocations and scaling decisions where mistakes are still reversible.

Causal Inference (Incrementality Tests)

Causal inference moves beyond observation into testing. Lift tests, geo experiments, synthetic controls, and holdouts help isolate true impact.

These methods are more complex, but they’re essential for new channel evaluation, major investments, and decisions with high downside risk.

Durable Truth (MMM + Repeated Tests)

At the highest level, repeated experimentation and systems like media mix modeling create durable insights. These approaches inform long-term strategy rather than day-to-day optimization.

Use this level for annual planning, portfolio allocation, and market-level decisions.

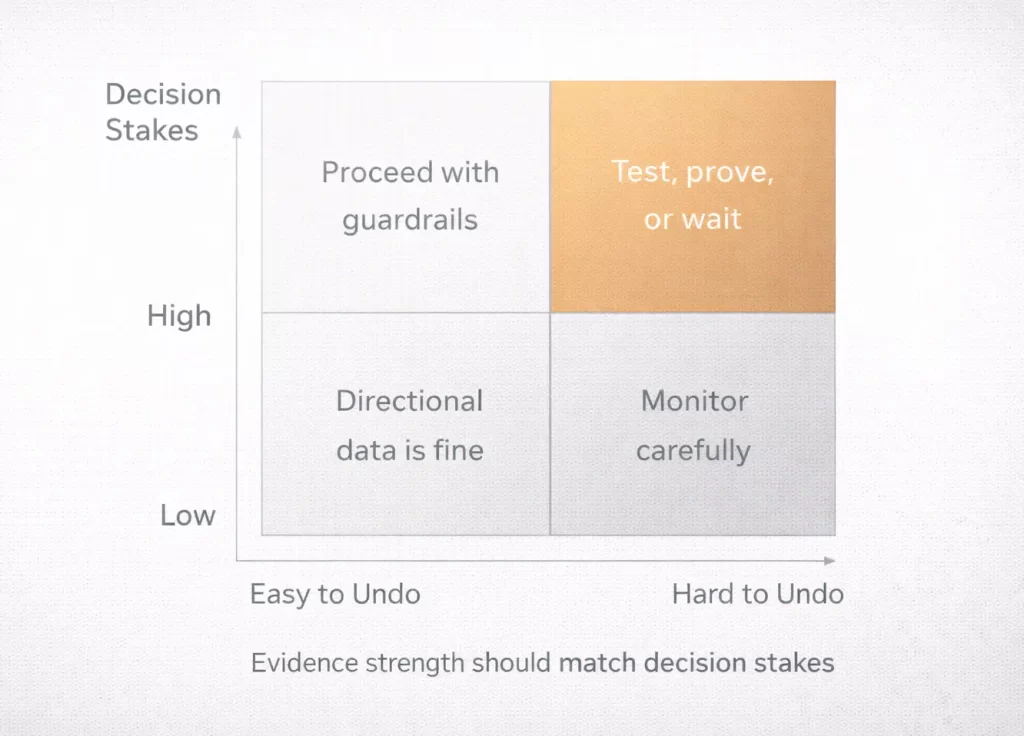

A Decision Tree for Real Life (Not Theory)

Frameworks only matter if they work in practice.

Start with the decision that’s being made:

- Creative decision

- Campaign structure decision

- Budget shift decision

- Channel investment decision

- Tracking/measurement decision

Next, assess the stakes. If you’re wrong, what’s the cost?

- Low

- Medium

- High

Then consider reversibility. How long would it take to undo the decision?

- Days

- Weeks

- Months

Large strategic changes take longer to reverse than tactical tweaks. Once stakes and reversibility are clear, choose your evidence level:

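- Low cost + reversible → Directional data is fine

- Anything in between → Converging evidence from multiple sources

- High cost + irreversible → Causal evidence (an incrementality test), or wait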
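Written as code, the rule is small enough to live in a planning script. This sketch encodes the low-cost and high-cost rules above literally and, as an assumption, sends every in-between case to converging evidence, the middle rung of the Confidence Ladder. Treating “reversible” as “undone within days” is also an assumption; adjust the cutoffs to your own risk tolerance. The enum and function names are hypothetical.

```python
from enum import Enum


class Stakes(Enum):
    LOW = "low"
    MEDIUM = "medium"
    HIGH = "high"


class Reversibility(Enum):
    """Roughly how long it would take to undo the decision."""
    DAYS = "days"
    WEEKS = "weeks"
    MONTHS = "months"


def evidence_needed(stakes: Stakes, reversibility: Reversibility) -> str:
    """Pick a rung on the confidence ladder for a single decision."""
    if stakes is Stakes.LOW and reversibility is Reversibility.DAYS:
        return "directional"     # cheap and quick to undo
    if stakes is Stakes.HIGH and reversibility is Reversibility.MONTHS:
        return "causal or wait"  # expensive and slow to undo
    # Assumption: everything in between needs multiple sources that agree.
    return "converging"


if __name__ == "__main__":
    print(evidence_needed(Stakes.LOW, Reversibility.DAYS))      # directional
    print(evidence_needed(Stakes.MEDIUM, Reversibility.WEEKS))  # converging
    print(evidence_needed(Stakes.HIGH, Reversibility.MONTHS))   # causal or wait
```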

Decision Template

| Decision | |
|---|---|
| Stakes | |
| Reversibility | |
| Evidence Needed | |
| Time Horizon | |
| Next Action | |

Directional Data Is Fine If You Use It Like a Scientist

Directional data isn’t useless – it’s just often misapplied. A single CPA number lacks context, but changes in CPA over time can be meaningful.

When working with directional data:

- Define the decision before looking at the data

- Use aggregates, not point estimates

- Look for convergence

In creative testing, this means setting expectations in advance. If the goal is a 5% efficiency improvement, evaluate performance across a portfolio of ads rather than a single winner.

If hook rate improves, CTR holds steady, and CPA trends down, the signal is likely real.
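Here is one way to sketch that discipline, assuming you can export spend and conversions per ad for a baseline set and a test set. It pools each portfolio into a single CPA (total spend divided by total conversions) instead of crowning one winner, checks the gain against a target fixed in advance, and only calls the signal real when hook rate, CTR, and CPA agree. The class and function names, the 5% target, and the ±2% band for “CTR holds steady” are hypothetical choices.

```python
from dataclasses import dataclass
from typing import List

TARGET_GAIN = 0.05  # decided before looking at results: 5% cheaper CPA


@dataclass
class AdResult:
    spend: float
    conversions: int


def pooled_cpa(ads: List[AdResult]) -> float:
    """Portfolio CPA: total spend / total conversions, not an average of ad CPAs."""
    total_conversions = sum(ad.conversions for ad in ads)
    if total_conversions == 0:
        raise ValueError("portfolio has no conversions yet")
    return sum(ad.spend for ad in ads) / total_conversions


def efficiency_gain(baseline: List[AdResult], test: List[AdResult]) -> float:
    """Relative CPA improvement of the test portfolio (0.05 = 5% cheaper)."""
    base = pooled_cpa(baseline)
    return (base - pooled_cpa(test)) / base


def signals_converge(hook_change: float, ctr_change: float, cpa_change: float,
                     ctr_band: float = 0.02) -> bool:
    """Hook rate up, CTR down by no more than the band, CPA down.

    All inputs are relative changes, e.g. 0.10 means +10%.
    """
    return hook_change > 0 and ctr_change >= -ctr_band and cpa_change < 0


if __name__ == "__main__":
    baseline = [AdResult(1000.0, 20), AdResult(500.0, 10)]  # pooled CPA 50.00
    test = [AdResult(900.0, 20), AdResult(600.0, 13)]       # pooled CPA ~45.45
    gain = efficiency_gain(baseline, test)
    print(f"gain {gain:.1%}, meets target: {gain >= TARGET_GAIN}")
    print(signals_converge(hook_change=0.12, ctr_change=0.0, cpa_change=-gain))
```

Pooling matters because averaging per-ad CPAs gives a tiny-spend ad the same weight as your biggest one.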

The same logic applies to funnel analysis. If lead volume increases but quality declines, scaling is the wrong move. Fix the constraint first.

Incrementality Is for Decisions You Can’t Afford to Guess On

Incrementality isn’t about optimization. It’s about certainty.

When attribution and business outcomes diverge, directional signals stop being enough. Incrementality testing focuses on contribution rather than credit.

Common situations where incrementality is necessary:

- Performance looks strong but business impact is flat

- Cannibalization between campaigns (brand, PMax, retargeting)

- Scaling hits a ceiling with no explanation

- Platforms disagree wildly

These are moments when guessing is expensive, and attribution alone can’t settle the question.
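For a basic holdout or geo test, contribution is usually summarized as lift: how much higher the exposed group’s conversion rate is than the holdout’s, relative to the holdout. The sketch below assumes a clean random split and simple counts per group; the function and field names are hypothetical, and it deliberately leaves out the significance testing a real readout needs. Comparing incremental conversions with what the platform attributed is a quick way to see how much of the platform’s credit reflects actual contribution.

```python
from typing import NamedTuple


class LiftResult(NamedTuple):
    lift: float                     # relative lift vs. holdout (0.20 = +20%)
    incremental_conversions: float  # conversions beyond the holdout baseline
    share_of_attributed: float      # incremental / platform-attributed


def holdout_lift(test_conversions: int, test_size: int,
                 control_conversions: int, control_size: int,
                 attributed_conversions: int) -> LiftResult:
    """Point estimate of lift from an exposed group vs. a holdout group.

    No confidence interval here; a real readout should add one.
    """
    if min(test_size, control_size, control_conversions, attributed_conversions) <= 0:
        raise ValueError("group sizes and baseline/attributed counts must be positive")
    test_rate = test_conversions / test_size
    control_rate = control_conversions / control_size
    # What the exposed group would likely have done without the ads.
    baseline = control_rate * test_size
    incremental = test_conversions - baseline
    return LiftResult(
        lift=(test_rate - control_rate) / control_rate,
        incremental_conversions=incremental,
        share_of_attributed=incremental / attributed_conversions,
    )


if __name__ == "__main__":
    # Hypothetical numbers: the platform credits 400 conversions to the exposed group.
    print(holdout_lift(600, 100_000, 500, 100_000, attributed_conversions=400))
```

In this made-up example, a 20% lift sounds healthy, yet only about a quarter of the platform-attributed conversions are incremental: the “performance looks strong but business impact is flat” pattern in numbers.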

How Incrementality Contributes to Reporting

Most reporting fails because it tries to explain everything at once.

Effective reports answer four questions:

- What changed?

- Why might it have changed?

- So what?

- Now what?

Incrementality tests simplify this process by isolating impact in controlled environments. While they aren’t part of weekly reporting, they provide powerful context on a monthly or quarterly cadence.

Sometimes the Best Move Is to Wait

There’s a difference between patience and paralysis. Acting too quickly often destroys the opportunity to learn.

Focus on a minimum time to learn. If conversion lag is 2 weeks, judging results after 2 days guarantees noise.

Likewise, avoid drawing conclusions from tiny samples. Low spend and low volume require more time, not faster reactions.
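A rough readiness check can combine both ideas. Assuming you know your typical conversion lag and pick a minimum conversion count before launch, the sketch estimates how many more days to wait: long enough to cover one full lag cycle and, at the current daily pace, to reach the minimum volume. The function name, the 50-conversion default, and the steady-pace assumption are illustrative, not rules.

```python
from typing import Optional


def days_until_readable(days_running: int, conversion_lag_days: int,
                        conversions: int, min_conversions: int = 50) -> Optional[int]:
    """Estimate extra days to wait before judging a test.

    Returns None when there is no volume yet, so no pace to project from.
    """
    if days_running < 1:
        raise ValueError("days_running must be at least 1")
    lag_gap = max(0, conversion_lag_days - days_running)
    shortfall = max(0, min_conversions - conversions)
    if shortfall == 0:
        return lag_gap
    if conversions == 0:
        return None
    # Days needed at the current pace: shortfall / (conversions / days_running),
    # computed with integer ceiling division to avoid float rounding.
    volume_gap = -(-(shortfall * days_running) // conversions)
    return max(lag_gap, volume_gap)


if __name__ == "__main__":
    # Two days into a test with a two-week conversion lag and 8 conversions so far.
    print(days_until_readable(2, 14, 8))  # 12
```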

A Practical Cadence for Analysis

Over-analysis kills insight. A clear cadence protects learning.

- Daily: Budget pacing, delivery

- Weekly: Conversion trends, ad performance

- Monthly: Bigger reallocations, tactic pauses, test reviews

- Quarterly: Channel portfolio, new strategy, creative concepts

Automate daily and weekly checks whenever possible. The less time spent monitoring noise, the more time available for real decisions.
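Budget pacing is the easiest of these to automate. A minimal sketch, assuming a monthly budget that should be spent evenly across calendar days and a spend figure that runs through the end of the given day, compares actual spend to straight-line expected spend and flags anything outside a tolerance band. The function names and the ±10% band are hypothetical, and real campaigns often pace unevenly by day of week.

```python
import calendar
from datetime import date


def pacing_ratio(spend_to_date: float, monthly_budget: float, today: date) -> float:
    """Actual spend divided by straight-line expected spend through `today`."""
    days_in_month = calendar.monthrange(today.year, today.month)[1]
    expected = monthly_budget * today.day / days_in_month
    return spend_to_date / expected


def pacing_flag(ratio: float, tolerance: float = 0.10) -> str:
    """Label the ratio; anything within ±tolerance counts as on pace."""
    if ratio > 1 + tolerance:
        return "overpacing"
    if ratio < 1 - tolerance:
        return "underpacing"
    return "on pace"


if __name__ == "__main__":
    # Hypothetical: $30,000 June budget, checked at the end of June 15.
    ratio = pacing_ratio(18_000, 30_000, date(2024, 6, 15))
    print(f"{ratio:.2f} {pacing_flag(ratio)}")  # 1.20 overpacing
```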

The Point Isn’t Better Data. It’s Better Judgment.

Marketing will never provide perfect information. The teams that perform best don’t wait for certainty. They design decision systems that work without it.

When evidence strength matches decision stakes, when you steer clear of false-confidence traps, and when learning has time to happen, imperfect data stops being a limitation.

It becomes an advantage.